Edge AI applications are spreading rapidly across industries, and they demand hardware accelerators that are compact, efficient, and powerful. The 40 TOPS M.2 AI Accelerator Module is built to meet this need. By bringing high computational power directly to edge devices, it keeps latency low and performance high for artificial intelligence workloads, especially in real-time scenarios.

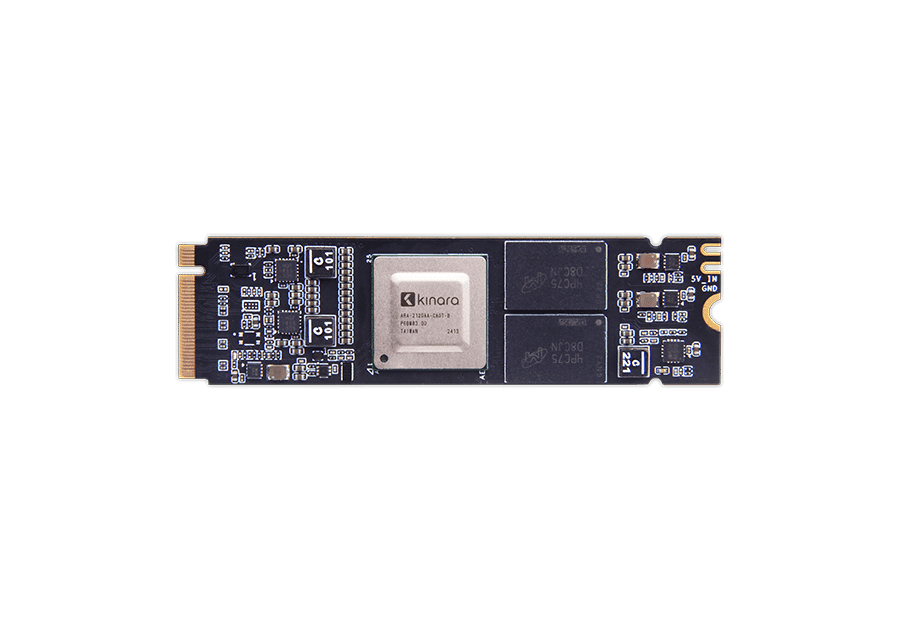

Compact and Versatile Form Factor

Built on the widely adopted M.2 form factor, the AI accelerator module is designed for easy integration into a variety of platforms, including embedded systems, industrial PCs, and edge servers. Its small footprint lets it fit into space-constrained enclosures while still delivering up to 40 TOPS of AI processing. Because it installs in a standard M.2 slot, it can be deployed quickly on compatible hosts, reducing development time and simplifying system architecture.

Powered by Efficient NPU Architecture

At the heart of the module is a high-efficiency NPU (Neural Processing Unit) engineered specifically for AI tasks. Rated at up to 40 TOPS (tera-operations, or trillions of operations, per second), it offers substantial compute for modern AI models. Like any peak rating, the throughput a particular model actually achieves depends on its size, its operator mix, and how well it maps onto the hardware. The architecture supports both high-throughput inference and low-power operation, making it suitable for environments where energy efficiency matters as much as raw performance.
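Because 40 TOPS is a peak figure, a quick back-of-the-envelope estimate helps translate it into inferences per second. The sketch below assumes a compute-bound model and treats both the per-inference operation count and the achieved utilization as inputs you would measure or look up for your own model. The function name `estimated_fps` and the example numbers (10 giga-operations per inference, 50% utilization) are illustrative assumptions, not specifications of this module. Vendors and model cards do not always count operations the same way (a multiply-accumulate is often counted as two operations), so make sure both numbers use the same convention.

```python
def estimated_fps(peak_tops: float, gops_per_inference: float, utilization: float) -> float:
    """Rough upper bound on inferences per second for a compute-bound model.

    peak_tops          -- advertised peak throughput in tera-operations/s (e.g. 40)
    gops_per_inference -- operations one forward pass needs, in giga-operations
    utilization        -- assumed fraction of peak actually sustained, in (0, 1]
    """
    if gops_per_inference <= 0:
        raise ValueError("gops_per_inference must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    sustained_ops_per_s = peak_tops * 1e12 * utilization  # TOPS -> ops/s, derated
    ops_per_inference = gops_per_inference * 1e9          # GOPs -> ops
    return sustained_ops_per_s / ops_per_inference


# Hypothetical inputs: 40 TOPS peak, 10 GOPs per inference, 50% utilization.
print(estimated_fps(40, 10, 0.5))  # 2000.0
```

With those hypothetical inputs the ceiling is 2000 inferences per second; memory traffic, pre- and post-processing, and host I/O usually pull real numbers lower.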

Optimized for Generative and Transformer Models

The module is optimized for the most demanding AI workloads, including large transformer and generative models. Examples include Meta's Llama 2, whose large parameter counts bring heavy memory-bandwidth requirements. The hardware is built to handle these computational loads, although real-world speed and output quality still depend on the model's size and on how it is prepared for the NPU.
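For large language models, the limiting factor during token generation is often memory bandwidth rather than TOPS. At batch size 1, producing each new token requires reading roughly all of the model's weights once. The following is a minimal sketch of that upper bound, assuming weights dominate memory traffic (it ignores the KV cache and activations). The function name and the 35 GB/s bandwidth used in the example are hypothetical placeholders, not figures for this module.

```python
def max_decode_tokens_per_s(params_billions: float, bits_per_weight: float,
                            bandwidth_gb_s: float) -> float:
    """Bandwidth-bound ceiling on batch-1 token generation speed.

    Assumes every generated token streams all weights from memory once and
    ignores KV-cache and activation traffic, so real throughput is lower.
    """
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8  # bits -> bytes
    bytes_per_s = bandwidth_gb_s * 1e9                          # GB/s -> bytes/s
    return bytes_per_s / weight_bytes


# Hypothetical: 7B parameters, 4-bit weights, 35 GB/s memory bandwidth.
print(max_decode_tokens_per_s(7, 4, 35))  # 10.0
```

Consider a 7-billion-parameter model (Llama 2 is published in 7B, 13B, and 70B sizes) stored at 4 bits per weight. Its weights occupy 3.5 GB, so a hypothetical 35 GB/s of bandwidth caps decoding at about 10 tokens per second, regardless of peak TOPS.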

Broad AI Framework Compatibility

One of the module's major advantages is its compatibility with leading AI tooling. Developers can build models in familiar frameworks such as TensorFlow and PyTorch, or bring them in through the ONNX interchange format, and deploy them without extensive hardware-specific reprogramming. This broad support simplifies development workflows and shortens time to market for AI-enabled applications.

Real-World AI Use Cases

The AI accelerator module is well suited to edge deployment in sectors such as industrial automation, smart surveillance, robotics, automotive, and healthcare. In computer vision, for instance, it can run YOLOv8 object detection models, enabling real-time monitoring and decision-making at the edge without relying on cloud infrastructure. Keeping inference local improves data privacy and response times, and it also reduces cloud computing costs.
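Object detectors in the YOLO family emit many overlapping candidate boxes, which are typically filtered with non-maximum suppression (NMS) before the results drive any decision. Whether NMS runs on the accelerator or on the host CPU depends on how the model is compiled, so treat the following as a sketch under assumptions. It is a plain-Python, class-agnostic greedy NMS with illustrative thresholds (`iou_threshold=0.5`, `score_threshold=0.25`). Production YOLOv8 pipelines usually apply NMS per class and use vectorized implementations.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2): top-left and bottom-right corners


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float],
        iou_threshold: float = 0.5, score_threshold: float = 0.25) -> List[int]:
    """Greedy, class-agnostic NMS; returns indices of kept boxes, best first."""
    # Drop low-confidence candidates, then visit the rest in descending score order.
    order = sorted((i for i, s in enumerate(scores) if s >= score_threshold),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any box already kept.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

Here the second box overlaps the first with an IoU of about 0.68, so it is suppressed, while the distant third box survives.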

In smart manufacturing, the module can process visual and sensor data locally to detect anomalies, predict maintenance needs, and ensure quality control. In healthcare devices, it can help run AI models for diagnostics and patient monitoring, even in offline or remote locations.
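As a point of reference for local anomaly detection, the sketch below implements a rolling z-score detector over a single sensor stream. A reading is flagged when it lies more than `threshold` standard deviations from the mean of the previous `window` readings. This is a simple statistical baseline, not the AI model the module would run, and the class name and parameter values are hypothetical. In practice a learned model on the accelerator would take over this role, or a rule like this could act as an inexpensive first filter on the host.

```python
import math
from collections import deque


class RollingZScore:
    """Flags readings far from the mean of the previous `window` readings."""

    def __init__(self, window: int = 50, threshold: float = 3.0) -> None:
        if window < 2:
            raise ValueError("window must be at least 2")
        self.history = deque(maxlen=window)  # oldest reading drops out automatically
        self.threshold = threshold

    def update(self, x: float) -> bool:
        """Return True if x is anomalous versus the current window, then record it."""
        anomalous = False
        if len(self.history) == self.history.maxlen:  # only judge once the window is full
            n = len(self.history)
            mean = sum(self.history) / n
            std = math.sqrt(sum((v - mean) ** 2 for v in self.history) / (n - 1))
            # A perfectly flat window has std 0: treat any change as anomalous.
            anomalous = x != mean if std == 0 else abs(x - mean) / std > self.threshold
        self.history.append(x)
        return anomalous


detector = RollingZScore(window=5, threshold=3.0)
readings = [10, 11, 10, 11, 10, 10.5, 50]
print([detector.update(r) for r in readings])
# [False, False, False, False, False, False, True]
```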

Thermal and Power Efficiency

Running Hailo AI accelerator workloads at the edge often requires efficient thermal management and low power consumption. The module is engineered to operate in challenging environments where heat dissipation is limited. With its low thermal design power, it can maintain consistent performance without active cooling in most use cases, reducing system complexity and power consumption.
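Power claims are easiest to compare as energy per inference, and as average power over a duty cycle, because many edge devices alternate between bursts of inference and idle periods. The helper functions below are a hypothetical sketch. The wattages in the example (5 W active, 1 W idle) are placeholders, not the module's rated figures, so substitute measured values for your platform.

```python
def energy_per_inference_mj(power_w: float, inferences_per_s: float) -> float:
    """Energy per inference in millijoules: watts (J/s) divided by inferences/s."""
    if inferences_per_s <= 0:
        raise ValueError("inferences_per_s must be positive")
    return power_w / inferences_per_s * 1000.0


def average_power_w(active_w: float, idle_w: float, duty_cycle: float) -> float:
    """Time-weighted average power when the module is busy `duty_cycle` of the time."""
    if not 0 <= duty_cycle <= 1:
        raise ValueError("duty_cycle must be in [0, 1]")
    return active_w * duty_cycle + idle_w * (1 - duty_cycle)


# Hypothetical figures, not the module's ratings: 5 W active, 1 W idle.
print(energy_per_inference_mj(5.0, 500))  # about 10 mJ per inference
print(average_power_w(5.0, 1.0, 0.2))     # about 1.8 W at a 20% duty cycle
```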

Scalability and Integration

System integrators and developers benefit from the module’s scalability. Whether deploying a single unit or scaling to hundreds across a fleet of devices, its standardized M.2 interface and software compatibility ensure consistent performance. Additionally, its design supports integration with popular embedded operating systems and hardware platforms, enabling flexible deployment strategies.
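When scaling out, a rough sizing calculation shows how many modules a deployment needs. The sketch below makes several assumptions: each camera stream is served by a single module (streams are not split), a module's sustained throughput on your model has been measured, and some headroom is left unused. The function name, the 200 inferences-per-second module figure, and the 80% headroom are hypothetical.

```python
import math


def modules_needed(streams: int, fps_per_stream: float,
                   module_fps: float, headroom: float = 0.8) -> int:
    """Modules required when each stream is pinned to one module.

    module_fps -- sustained inferences/s one module achieves on your model (measured)
    headroom   -- fraction of that throughput you are willing to plan against
    """
    if fps_per_stream <= 0 or not 0 < headroom <= 1:
        raise ValueError("fps_per_stream must be positive and headroom in (0, 1]")
    budget = module_fps * headroom                        # usable inferences/s per module
    streams_per_module = math.floor(budget / fps_per_stream)
    if streams_per_module == 0:
        raise ValueError("a single stream exceeds one module's budget")
    return math.ceil(streams / streams_per_module)


# Hypothetical: 20 cameras at 30 fps, 200 inferences/s per module, 80% headroom.
print(modules_needed(20, 30, 200))  # 4
```

Each module can host five whole streams within its 160 fps budget, so 20 streams need four modules. Even 16 streams need four. A naive division of total demand (480 / 160 = 3) would undercount because it ignores the unused capacity left on each module.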

Conclusion

The 40 TOPS M.2 AI Accelerator Module stands out as a high-performance, compact, and energy-efficient solution for edge AI deployment. With support for recent transformer and generative AI models, compatibility with leading frameworks, and a small form factor, it is a strong choice for businesses looking to unlock the potential of AI at the edge. It delivers the performance and flexibility needed to bring intelligent computing closer to the data source, paving the way for faster and smarter real-world applications.